Int32 serialization in OCaml

Posted: October 4, 2013 Filed under: OCaml, Programming 5 CommentsToday, I’m going to talk a bit about the problem of serializing an int32 in OCaml. As I’m only working on Intel machines, I’m not interested in portability, and prefer little-endian serialization. This should be natural and easy.

The interface

val set32: string -> int -> int32 -> unit val get32: string -> int -> int32

The microbenchmark

We’re going to store an int32 into a string,retrieve it, and check if it’s the same. We’re going to do this 1_000_000_000 times, see how long it took, and calculate the speed.

let benchmark n =

let t0 = Unix.gettimeofday() in

let s = String.create 4 in

let limit = Int32.of_int n in

let rec loop i32 =

if i32 = limit

then ()

else

let () = set32 s 0 i32 in

let j32 = get32 s 0 in

assert (i32 = j32);

loop (Int32.succ i32)

in

let () = loop 0l in

let t1 = Unix.gettimeofday () in

let d = t1 -. t0 in

let speed = float n /. d in

let megaspeed = speed /. 1000000.0 in

Printf.printf "%i took %f => %fe6/s\n" n d megaspeed

Attempt 0: Naive implementation

This is rather straight forward: mask, extract the char, store, shift and repeat. Retrieving the int32 from the string is the opposite. No rocket surgery here.

This is simple, readable code.

let set32_ocaml s pos (i:int32) =

let (>:) = Int32.shift_right_logical in

let (&:) = Int32.logand in

let mask = Int32.of_int 0xff in

let to_char v = Char.chr (Int32.to_int v) in

let too_far = pos + 4 in

let rec loop p i =

if p = too_far

then ()

else

let vp = i &: mask in

let cp = to_char vp in

let () = s.[p] <- cp in

loop (p+1) (i >: 8)

in

loop pos i

let get32_ocaml s pos =

let (<:) = Int32.shift_left in

let (|:) = Int32.logor in

let to_i32 c = Int32.of_int (Char.code c) in

let rec loop acc p =

if p < pos

then acc

else

let cp = s.[p] in

let vp = to_i32 cp in

let acc' = (acc <: 8) |: vp in

loop acc' (p-1)

in

loop 0l (pos + 3)

OCaml is a nice high level language, but this bit twiddling feels rather clumsy and ugly.

Anyway, let’s benchmark it.

| Strategy | Speed |

|---|---|

| naive OCaml | 16.0e6/s |

A quick peek at how Thrift does it

let get_byte32 i b = 255 land (Int32.to_int (Int32.shift_right i (8*b)))

class trans = object(self)

val ibyte = String.create 8

...

method writeI32 i =

let gb = get_byte32 i in

for i=0 to 3 do

ibyte.[3-i] <- char_of_int (gb i)

done;

trans#write ibyte 0 4

Ok, this uses the same strategy; but there’s a for loop there. The conversion is done in the ibyte buffer and then copied along. It’s a bit sub-awesome, but the extra copy of 4 bytes shouldn’t be too costly neither.

Attempt 1: But in C, it would be way faster

It’s a platitude I hear a lot, but in this case, it really should be faster. After all, if you want to retrieve an int32 from a string, all you need to do is to cast the char* to an int32_t* and de-reference the value.

Let’s try this:

external set32 : string -> int -> int32 -> unit = "zooph_set32" external get32 : string -> int -> int32 = "zooph_get32"

#include <stdint.h>

#include <stdio.h>

#include <caml/alloc.h>

#include <caml/memory.h>

#include <caml/mlvalues.h>

value zooph_set32(value vs, value vpos, value vi){

CAMLparam3(vs, vpos, vi);

char* buf = String_val(vs);

int pos = Int_val(vpos);

int32_t i = Int32_val(vi);

char* buf_off = &buf[pos];

int32_t* casted = (int32_t*)buf_off;

casted[0] = i;

CAMLreturn (Val_unit);

}

value zooph_get32(value vs,value vpos){

CAMLparam2(vs,vpos);

CAMLlocal1(result);

char* buf = String_val(vs);

int pos = Int_val(vpos);

char* buf_off = &buf[pos];

int32_t* casted = (int32_t*)buf_off;

int32_t i32 = casted[0];

result = caml_copy_int32(i32);

CAMLreturn(result);

}

I called my compilation unit zooph.c an onomatopoeia that pays tribute to how fast I expect this to be. There’s no loop, and the machine has the skills to do the transformation in one step. So it should roughly be about 4 times faster.

Let’s benchmark it.

| Strategy | Speed |

|---|---|

| naive OCaml | 16.0e6 |

| C via FFI | 32.3e6 |

Hm… it’s faster allright, but it’s also a bit disappointing. So what went wrong?

A quick look at the assembly code reveals a lot:

zooph_set32: .LFB34: .cfi_startproc movl 8(%rdx), %eax sarq %rsi movslq %esi, %rsi movl %eax, (%rdi,%rsi) movl $1, %eax ret .cfi_endproc .LFE34: .size zooph_set32, .-zooph_set32 .p2align 4,,15 .globl zooph_get32 .type zooph_get32, @function zooph_get32: .LFB35: .cfi_startproc pushq %rbx .cfi_def_cfa_offset 16 .cfi_offset 3, -16 movq %rsi, %rdx sarq %rdx subq $160, %rsp .cfi_def_cfa_offset 176 movslq %edx, %rdx movq caml_local_roots(%rip), %rbx leaq 8(%rsp), %rcx movq %rdi, 8(%rsp) movl (%rdi,%rdx), %edi movq %rsi, (%rsp) movq $1, 32(%rsp) movq %rcx, 40(%rsp) leaq (%rsp), %rcx movq %rbx, 16(%rsp) movq $2, 24(%rsp) movq $0, 152(%rsp) movq %rcx, 48(%rsp) leaq 16(%rsp), %rcx movq $1, 96(%rsp) movq $1, 88(%rsp) movq %rcx, 80(%rsp) leaq 80(%rsp), %rcx movq %rcx, caml_local_roots(%rip) leaq 152(%rsp), %rcx movq %rcx, 104(%rsp) call caml_copy_int32 movq %rbx, caml_local_roots(%rip) addq $160, %rsp .cfi_def_cfa_offset 16 popq %rbx .cfi_def_cfa_offset 8 ret .cfi_endproc

While zooph_set32 seems to be in order, its counter part is rather messy. On closer inspection, not even the set32 side is optimal. OCaml’s FFI allows smooth (at least compared to jni) interaction with native code in other languages, it also installs a firm border across which no inlining is possible (not with OCaml that is).

Let’s take a look at how the benchmark code calls this.

.L177: movq %rbx, 8(%rsp) movq %rax, 0(%rsp) movq $1, %rsi movq 16(%rbx), %rdi movq %rax, %rdx movq zooph_set32@GOTPCREL(%rip), %rax call caml_c_call@PLT .L179: movq caml_young_ptr@GOTPCREL(%rip), %r11 movq (%r11), %r15 movq $1, %rsi movq 8(%rsp), %rax movq 16(%rax), %rdi movq zooph_get32@GOTPCREL(%rip), %rax call caml_c_call@PLT .L180: movq caml_young_ptr@GOTPCREL(%rip), %r11 movq (%r11), %r15 movslq 8(%rax), %rax movq 0(%rsp), %rdi movslq 8(%rdi), %rbx cmpq %rax, %rbx je .L176

You see stuff being pushed on the stack before the call. For raw speed, you don’t want this to happen. For raw speed, you don’t even want a call.

To get there, you need to translate the benchmark to C too. I’m not going to bother, because I have another trick ready.

Attempt 2: OCaml 4.01 primitives

OCaml 4.01 got released recently, and there’s a little entry in the release notes.

PR#5771: Add primitives for reading 2, 4, 8 bytes in strings and bigarrays

(Pierre Chambart)

However, for some reason, they are not really exposed, and I had to dig to find them. Using them however is trivial.

external get32_prim : string -> int -> int32 = "%caml_string_get32" external set32_prim : string -> int -> int32 -> unit = "%caml_string_set32"

That’s all there is to it. Basically, you say that you know that the compiler knows how to do this, and that from now on, you want to do that too.

Let’s benchmark it.

| Strategy | Speed |

|---|---|

| naive OCaml | 16.0e6 |

| C via FFI | 32.3e6 |

| OCaml with primitives | 139e6 |

Wow.

Closing words

I’ve put the code for this on github: https://github.com/toolslive/int32_blog Anyway, we need to (de)serialize int64 values as well. Determining the speedup there is left as an exercise for the reader (tip: it’s even better).

I think some people will feel the urge to apply this to their serialization code as well.

Have fun,

Romain.

Arakoon 1.6.0 is out

Posted: July 8, 2013 Filed under: Arakoon | Tags: arakoon, distributed systems, optimization, paxos 2 CommentsH: One voter, 16,472 votes — a slight anomaly…?

E: Not really, Mr. Hanna. You see, Baldrick may look like a monkey who’s

been put in a suit and then strategically shaved, but he is a brillant

politician. The number of votes I cast is simply a reflection of how

firmly I believe in his policies.Black Adder III, Episode 1

Introduction

So, Arakoon 1.6.0 is out, a release so huge we just had to leap minor increments! This blog post will walk you through the various changes between the 1.4 series and the 1.6 series. We’ll explain why it’s faster, and give an indication by how much.

Harvesting the client queue

Arakoon is a multipaxos implementation. This means that arakoon nodes try to gain consensus on what the next transaction is that needs to be applied.

The thing they gain consensus on is called a paxos value. In all Arakoons (≤ 1.4) there was a 1:1 mapping between a client update and a paxos value. This all works fine in LAN, but when the latency between nodes becomes a lot higher, like in a WAN setup, performance becomes abysmally slow. But some of your users are using Arakoon in a multi-datacenter setup. The solution chosen to address this was to have a n:1 mapping between client updates and paxos values. What Arakoon 1.6 does when the time has come to decide on the next paxos value, is to take all (or up to a limit) of the queued updates, and stuff them in the next paxos value. For multiple clients and small updates this means an almost linear speedup in a WAN setting.

Batched Local store

Ops: We have seen a corrupt tokyo cabinet, and we can reproduce it!

Dev: Great! What’s the scenario?

Ops: It’s really easy. Just apply the following 218 million transactions to an empty store.

Dev: Err, euhm, thx ?!

When you want to fix a problem, having a 100% reproducible scenario is a blessing, but not if it takes forever. Fortunately, during the root cause analysis of such a problem, you have time to think, and thus the insight came: We can speed this up a lot! Having to maintain a transaction log is a mixed blessing, but one of the advantages is that you don’t have to apply all updates to the database immediately. So we added an in-memory caching layer that sits before Tokyo Cabinet. All updates happen there and from time to time, we push the updates through in a big batch. We can afford ourselves to do this as we already ensured durability via the transaction logs.

Logging improvements

Since the early days, Arakoon has a crash logger. When an Arakoon node dies unexpectly, it dumps its last 1000 or so log statements in a separate file. This is a really useful feature, and allowed us to determine the underlying cause of death in many occasions. Unfortunately, it was also a very expensive feature: We were always generating debug level log statements, even if the configuration stated ‘info’. Logging is expensive, even if you throw the log statements away. There is a big cost in the generation of all these strings via fancy Printf templates. So we decided to use a syntax extension that allows us to create these strings more lazily.

So now we have the best of both worlds: when all goes well, we don’t need debug statements and we never pay the cost of constructing the string, but when things turn sour,

we can still go back and create our crash log.

Numbers please

We’re not going to show full fledged benchmarks, just a simple microbenchmark to show a trend. The experiment is to let a client (or more clients) do a million sets to an Arakoon cluster and see how long it takes. All experiments were performed on a simple Intel Core i7 with a HDD.

| 1e6 sets/client | Arakoon 1.4.3 | Arakoon 1.6.0 |

| 1 client | 294s | 196s |

| 4 // clients | 900s | 625s |

| 8 // clients | 2180s | 1150s |

How to read the table? 1 client does 1e6 sets on Arakoon 1.6.0 and finishes after 196s. 8 clients do 1e6 sets in parallel on Arakoon 1.6.0 and the last client finishes after 1150s. That looks like a rather nice improvement.

The next table is for a cluster of 3 nodes, all running on the same machine and thus sharing the same HDD

| 1e6 sets/c | Arakoon 1.4.3 | Arakoon 1.6.0 |

| 1 client | 1090s | 676s |

| 4 // clients | 4005s | 2470s |

| 8 // clients | 8734s | 4700s |

A last table shows the effect of latency. We add 50ms latency on everything that goes over the loopback interface (via netem). Again, this is a setup with 3 nodes, all on the same host, sharing a hdd (although this will not matter too much).

| 1e5 sets/c | Arakoon 1.4.3 | Arakoon 1.6.0 |

| 8 // clients | 86400 | 20600 |

Note that this benchmark only goes to 1e5 (otherwise, it would take forever) You can immediately see the dramatic effect of latency on a distributed system. Arakoon 1.6.0 does a better job at fighting latency than Arakoon 1.4.3 (the difference in performance will increase with the number of clients).

Other notable improvements

Tokyo Cabinet patched

The whole data corruption annecdote eventually lead to a 32 bit overflow issue in tokyo cabinet. With the help of some friends, we fixed it and made our changes available here in this tokyo cabinet git repo. We really don’t want to expose anyone to database corruption and decided to backport this to the 1.4 series too.

Log sections

We started to use log sections, which allows you to configure a node such a way that for example the messaging layer is in debug, while everything else stays on info level. Which allows you to put the focus on something you’re examining while not having to wade through zillions of log messages. Handy for ops and devs alike.

Client API additions

We added a 2 small but useful methods to the client API. First there’s AssertExists of key that allows you to shortcut a sequence if a key is not there. Second, there’s multi_get_option: key list -> value option list Lwt.t which allows you to fetch a series of values, even if you’re not sure all of them exist in the database.

Always sync

Some of our users have fancy SSDs for the tlogs and wanted to be able to fsync after every write of a tlog entry. We accomodated them.

Full details

You can find the full list of improvements for Arakoon 1.6 in our bug tracker

Conclusion

It’s stable, way faster than it used to be, and it’s free.

You can get it from our githup repo

What are you waiting for?

Interludium: It’s Doomsday!

Posted: February 28, 2013 Filed under: algorithms | Tags: conway, doomsday, ocaml geek Leave a commentAnd Now for Something Completely Different (Monty Python’s Flying Circus)

Introduction

This post explains how to calculate the day of the week, with all calculations inside your head.

It’s a geekish party trick, and doesn’t really belong on a research blog,

but since today is in fact doomsday, I feel I can get away with it.

Anyway, here follows the algorithm written down in ocaml, but optimized for my brain.

Doomsday

Doomsday is the most important day of the year. It’s the last day of February:

February 28th on normal years, February 29th on leap years.

If you know what day of the week it is on Doomsday, you quite easily adjust for any other day of the year.

The trick is to have an anchor in each month that is the same day of the week as Doomsday.

Anchors for each month

The Anchor for February is doomsday itself.

The anchor for another even month m is m. The anchor for most odd months can be remembered by

the following mnemonic:

I work 9-5 at the 7-11

It simply helps you remember that doomsday for the 9th month is the fifth day of that month and vice-versa.

This only leaves January and March. March is easy: the zeroth of March is the last day of February, which is doomsday. January is a bit more difficult: most years it’s January 3rd, on leap years it’s the 4th. The ocaml summary is this:

let anchor_of_month yy = function (* "I work 9-5 at the 7-11" *)

| 1 -> 3 + leap yy

| 2 -> 28 + leap yy

| 3 -> 0 (* last day of Feb is zeroth day of Mar *)

| 5 -> 9

| 9 -> 5

| 7 -> 11

| 11 -> 7

| m -> m

An example:

- 25/12/2013 Christmas

- Today (28/2/2013 = Doomsday 2013) is a Thursday

- Hence, December 12 (12/12) is also a Thursday.

- 19 Thu, 26 Thu, 25 is a Wednesday.

This is all you need to know to be able to calculate the day of the week for any day in the current year.

The funny thing is that this is rather simple to explain to humans, but not so trivial to formalize.

Here’s an attempt:

let day_of_week hh yy mm dd =

let doom = anchor_of_month yy mm

and w = of_year hh yy

in

if doom >= dd

then zoom_down doom w dd

else zoom_up doom w dd

The two zoom functions are what we do when we move from the anchor in the month to the day we want.

let rec zoom_down known w dd =

if known = dd then days.(w)

else

let t = known -7 in

if t > dd

then zoom_down t w dd

else zoom_down (known - 1) (prev w) dd

let rec zoom_up known w dd =

if known = dd then days.(w)

else

let t = known + 7 in

if t < dd

then zoom_up t w dd

else zoom_up (known + 1) (next w) dd

If this were code written for a computer, I would have distilled something more compact, but my brain doesn’t handle higher order functions very well.

Doomsday for other years

The most frequent use of the doomsday algorithm as a party trick is the calculation the day of the week of someone’s birthday. The algo will yield a number, which you will interprete as a day of the week.

Days are numbered like this:

| Sun | 0 |

| Mon | 1 |

| … | … |

The next thing you need to know is the adjustment for the century.

For 19YY years it’s 3 (WEDnesday) while for 20YY years, it’s 2 (Y-TUE-K).

There are rules for other centuries, but I didn’t learn them, as I don’t frequent vampire parties.

The formula I tend to use to calculate the doomsday of a year is shown below.

let of_year hh yy =

let start = match hh with

| 19 -> 3 (* WE'D be in this day *)

| 20 -> 2 (* Y-Tue-K *)

in

let d12 = yy / 12 in

let r12 = (yy mod 12) in

let r4 = r12 / 4 in

(start + d12 + r12 + r4) mod 7

For some years it’s simpler to move the mod 7 up a bit.

Some tests

If you want to practice this, you need some special dates. Below are some exercises.

29/02/2013 => Thu

25/05/2013 => Sat

25/12/2013 => Wed

01/01/2013 => Tue

11/09/2001 => Tue

06/10/1973 => Sat

03/11/1971 => Wed

20/07/1969 => Sun

08/05/1945 => Tue

11/11/1918 => Mon

Closing words

I picked this up on various resources. The good stuff comes from there, the mistakes are my own.

- Doomsday_rule page on wikipedia

- Rudy Limeback’s page on the doomsday algorithm

- Mind performance hacks (O’Reilly)

Have fun,

Romain.

User functions in Arakoon

Posted: February 1, 2013 Filed under: Arakoon, Baardskeerder, OCaml, Programming, Uncategorized | Tags: arakoon, baardskeerder, key value store, OCaml, user functions 1 CommentMahomet cald the Hill to come to him. And when the Hill stood still, he was neuer a whit abashed, but said;

If the Hill will not come to Mahomet, Mahomet wil go to the hill.

Francis Bacon

Introduction

Arakoon tries to be a simple distributed key value store that favours consistency over availability.

From time to time, we get feature requests for additional commands like:

- assert_exists: assert a value exists for the key without caring what the value actually is

- increment_counter: increment (or create) a counter and return the new value.

- queue operations : add an element to the front/back of a double ended queue or pop an element

- set_and_update_X: insert a key value pair and update some non-trivial counter X (think averages, variances,…)

- …

The list is semi-infinite and the common thing here is that they are too complex/specific/weird/… to do them in one step using the provided interface. Of course, you can do all of this on the client side, but it will cost extra network round-trips. In distributed systems, you really want to keep the number of round-trips low, which pushes you towards these kind of feature requests.

Once you decided (performance bottlenecks probably) that you need extra functionality there are two things you can do. First, you can try to force or entice us into adding them to the core interface or alternatively, you can get by using Arakoon’s “user functions”. For some reason people fear them but there’s no real technical reason to do so.

This blog post will cover two things. First we’ll go in to the nitty gritty of coding and deploying user functions and then we’ll look at some of the strategic/architectural challenges of user functions.

How do user functions work?

The high level view is this: you build a user function, and register it to an Arakoon cluster before you start it. Then, at runtime, you can call it, using any client, with a parameter (a string option) and get back a result (string option). On the server side, the master will log this in its transaction log, try to reach consensus with the slave(s) and once that is the case, the user function will be executed inside a transaction. The result of that call will be sent to the client. If an exception occurs, the transaction will be aborted. Since Arakoon logs transactions it can replay them in case of calamities. This has a very important impact: since Arakoon needs to be able to replay the execution of a user function, you cannot do side effects, use random values or read the system clock.

Running Example

We’re going to try to build a simple queue API.

It will offer named queues with 2 operations: push and pop. Also, it’s a first-in-first-out thingy.

Arakoon 1

Client side API

Arakoon 1 offers the following API for user functions.

def userFunction(self, name, argument): '''Call a user-defined function on the server @param name: Name of user function @type name: string @param argument: Optional function argument @type argument: string option @return: Function result @rtype: string option '''

Let’s take a look at it. A userFunction call needs the name, which is a string, and an argument which is a string option and returns a result of type string option. So what exactly is a string option in Python? Well, it’s either a string or None. This allows a user function to not take input or to not yield a result.

Server side API

The server side API is in OCaml, and looks like this:

class type user_db = object method set : string -> string -> unit method get : string -> string method delete: string -> unit method test_and_set: string -> string option -> string option -> string option method range_entries: string option -> bool -> string option -> bool -> int -> (string * string) list end

User functions on server side match the client’s opaque signature.

user_db -> string option -> string option

Queue’s client side

Let’s create the client side in python. We’ll create a class that uses an Arakoon client and acts as a queue. The problem with push is that we need to fit both the name and the value into the one paramater we have available. We need to do our own serialization. Let’s just be lazy (smart?) and use Arakoon’s serialization. The code is shown below.

from arakoon import Arakoon

from arakoon import ArakoonProtocol as P

class ArakoonQueue:

def __init__(self, name, client):

self._name = name

self._client = client

def push(self, value):

input = P._packString(self._name)

input += P._packString(value)

self._client.userFunction("QDemo.push", input)

def pop(self):

value = self._client.userFunction("QDemo.pop", self._name)

return value

That wasn’t too hard now was it?

Queue, server side

The whole idea is that the operations happen on server side, so this will be a tat more complex.

We need to model a queue using a key value store. Code-wise, that’s not too difficult.

For each queue, we’ll keep 2 counters that keep track of both ends of the queue.

A push is merely getting the qname and the value out of the input, calculating the place where we need to store it, store the value there and update the counter for the back end of the queue. A pop is similar but when the queue becomes empty, we use the opportunity to reset the counters (maybe_reset_counters). The counter representation is a bit weird but Arakoon stores things in lexicographical order and we want to take advantage of this to keep our queue fifo. Hence, we need to make the counter in such a way the counter’s order is the same as a string’s order. The code is shown below.

(* file: plugin_qdemo.ml *)

open Registry

let zero = ""

let begin_name qname = qname ^ "/@begin"

let end_name qname = qname ^ "/@end"

let qprefix qname key = qname ^ "/" ^ key

let next_counter = function

| "" -> "A"

| s ->

begin

let length = String.length s in

let last = length - 1 in

let c = s.[last] in

if c = 'H'

then s ^ "A"

else let () = s.[last] <- Char.chr(Char.code c + 1) in

s

end

let log x=

let k s = let s' = "[plugin_qdemo]:" ^ s in

Lwt.ignore_result (Lwt_log.debug s')

in

Printf.ksprintf k x

let maybe_reset_counters user_db qname b1 =

let e_key = end_name qname in

let exists =

try let _ = user_db # get e_key in true with Not_found -> false

in

if exists

then

let ev = user_db # get e_key in

if ev = b1 then

let b_key = begin_name qname in

let () = user_db # set b_key zero in

let () = user_db # set e_key zero in

()

else

()

else ()

let push user_db vo =

match vo with

| None -> invalid_arg "push None"

| Some v ->

let qname, p1 = Llio.string_from v 0 in

let value, _ = Llio.string_from v p1 in

let e_key = end_name qname in

let b0 =

try user_db # get (end_name qname)

with Not_found -> zero

in

let b1 = next_counter b0 in

let () = user_db # set (qprefix qname b1) value in

let () = user_db # set e_key b1 in

None

let pop user_db vo =

match vo with

| None -> invalid_arg "pop None"

| Some qname ->

let b_key = begin_name qname in

let b0 =

try user_db # get (begin_name qname)

with Not_found -> zero

in

let b1 = next_counter b0 in

try

let k = qprefix qname b1 in

let v = user_db # get k in

let () = user_db # set b_key b1 in

let () = user_db # delete k in

let () = maybe_reset_counters user_db qname b1 in

Some v

with

Not_found ->

let e_key = end_name qname in

let () = user_db # set b_key zero in

let () = user_db # set e_key zero in

None

let () = Registry.register "QDemo.push" push

let () = Registry.register "QDemo.pop" pop

The last two lines register the functions to the Arakoon cluster when the module is loaded.

Compilation

So how do you deploy your user function module into an Arakoon cluster?

First need to compile your module into something that can be dynamically loaded.

To compile the plugin_qdemo.ml I persuade ocamlbuild like this:

ocamlbuild -use-ocamlfind -tag 'package(arakoon_client)' \ -cflag -thread -lflag -thread \ plugin_qdemo.cmxs

It’s not too difficult to write your own testcase for your functionality, so you can run it outside of Arakoon and concentrate on getting the code right.

Deployment

First, you need put your compilation unit into the Arakoon home directory on all your nodes of the cluster. And second, you need to add the name to the global section of your cluster configuration. Below, I show the configuration file for my simple, single node cluster called ricky.

[global] cluster = arakoon_0 cluster_id = ricky ### THIS REGISTERS THE USER FUNCTION: plugins = plugin_qdemo [arakoon_0] ip = 127.0.0.1 client_port = 4000 messaging_port = 4010 home = /tmp/arakoon/arakoon_0

All right, that’s it. Just a big warning about user functions here.

Once a user function is installed, it needs to remain available, with the same functionality for as long as user function calls are stored inside the transaction logs, as they need to be re-evaluated when one replays a transaction log to a store (for example when a node crashed, leaving a corrupt database behind). It’s not a bad idea to include a version in the name of a user function to cater for evolution.

Demo

Let’s use it in a simple python script.

def make_client():

clusterId = 'ricky'

config = Arakoon.ArakoonClientConfig(clusterId,

{"arakoon_0":("127.0.0.1", 4000)})

client = Arakoon.ArakoonClient(config)

return client

if __name__ == '__main__':

client = make_client()

q = ArakoonQueue("qdemo", client)

q.push("bla bla bla")

q.push("some more bla")

q.push("3")

q.push("4")

q.push("5")

print q.pop()

print q.pop()

print q.pop()

print q.pop()

with expected results.

Arakoon 2

With Arakoon 2 we moved to Baardskeerder as a database backend, replacing the combination of transaction logs and Tokyo Cabinet. Since the backend is Lwt-aware, this means that the server side API has become too:

module UserDB : sig type tx = Core.BS.tx type k = string type v = string val set : tx -> k -> v -> unit Lwt.t val get : tx -> k -> (v, k) Baardskeerder.result Lwt.t val delete : tx -> k -> (unit, Baardskeerder.k) Baardskeerder.result Lwt.t end module Registry: sig type f = UserDB.tx -> string option -> (string option) Lwt.t val register: string -> f -> unit val lookup: string -> f end

The major changes are that

- the api now uses Lwt

- we have (‘a,’b) Baardskeerder.result types, which we favour over the use of exceptions for normal cases.

Rewriting the queue implementation to Arakoon 2 yields something like:

(* file: plugin_qdemo2.ml *)

open Userdb

open Lwt

open Baardskeerder

let zero = ""

let begin_name qname = qname ^ "/@begin"

let end_name qname = qname ^ "/@end"

let qprefix qname key = qname ^ "/" ^ key

let next_counter = function

| "" -> "A"

| s ->

begin

let length = String.length s in

let last = length - 1 in

let c = s.[last] in

if c = 'H'

then s ^ "A"

else let () = s.[last] <- Char.chr(Char.code c + 1) in

s

end

let reset_counters tx qname =

let b_key = begin_name qname in

let e_key = end_name qname in

UserDB.set tx b_key zero >>= fun () ->

UserDB.set tx e_key zero

let maybe_reset_counters tx qname (b1:string) =

let e_key = end_name qname in

begin

UserDB.get tx e_key >>= function

| OK _ -> Lwt.return true

| NOK _ -> Lwt.return false

end >>= function

| true ->

begin

UserDB.get tx e_key >>= function

| OK ev ->

if ev = b1

then reset_counters tx qname

else Lwt.return ()

| NOK _ -> Lwt.return ()

end

| false -> Lwt.return ()

let push tx vo =

match vo with

| None -> Lwt.fail (invalid_arg "push None")

| Some v ->

let qname, p1 = Llio.string_from v 0 in

let value, _ = Llio.string_from v p1 in

Lwt_log.debug_f "push:qname=%S;value=%S" qname value >>= fun ()->

let e_key = end_name qname in

UserDB.get tx (end_name qname) >>= fun b0r ->

let b0 = match b0r with

| OK b0 -> b0

| _ -> zero

in

let b1 = next_counter b0 in

UserDB.set tx (qprefix qname b1) value >>= fun () ->

UserDB.set tx e_key b1 >>= fun () ->

Lwt.return None

let pop tx = function

| None -> Lwt.fail (invalid_arg "pop None")

| Some qname ->

begin

let b_key = begin_name qname in

UserDB.get tx (begin_name qname) >>= fun b0r ->

begin

match b0r with

| OK b0 -> Lwt.return b0

| NOK _ -> Lwt.return zero

end

>>= fun b0 ->

let b1 = next_counter b0 in

let k = qprefix qname b1 in

UserDB.get tx k >>= fun vr ->

begin

match vr with

| OK value ->

begin

UserDB.set tx b_key b1 >>= fun () ->

UserDB.delete tx k >>= function

| OK () ->

begin

maybe_reset_counters tx qname b1 >>= fun () ->

Lwt.return (Some value)

end

| NOK e -> Lwt.fail (Failure e)

end

| NOK _ ->

reset_counters tx qname >>= fun () ->

Lwt.return None

end

end

let () = Userdb.Registry.register "QDemo.push" push

let () = Userdb.Registry.register "QDemo.pop" pop

Both client side and deployment remain the same.

Questions asked

Ain’t there something wrong with this Queue?

Yes! Glad you noticed. This queue concept is fundamentally broken. The problem is the pop.

Follow this scenario:

- the client calls the QDemo.pop function

- the cluster pops the value from the queue and its master sends it to the client.

- the client dies before it can read the popped value

Now what? We’ve lost that value. Bloody network, how dare you!

Ok, I admit this was naughty, but it’s a good example of a simple local concept that doesn’t really amount to the same thing when tried in a distributed context. When confronted with this hole, people immediately try to fix this with “Right!, so we need an extra call to …”. To which I note: “But wasn’t this extra call just the thing you were trying to avoid in the first place?”

Why don’t you allow user functions to be written in <INSERT YOUR FAVOURITE LANGUAGE HERE>?

This is a good question, and there are several answers, most of them wrong. For example, anything along the lines of “I don’t like your stinkin’ language” needs to be rejected because a language’s cuteness is irrelevant.

There are several difficulties with the idea of offering user functions to be written in another programming language. For scripting languages like Python, Lua, PHP ,… we can either implement our own interpreter and offer a subset of the language, which is a lot of work with low return on investment, or integrate an existing interpreter/runtime which will probably not play nice with Lwt, or with the OCaml runtime (garbage collector). For compiled languages we might go via the ffi but it’s still way more complex for us. So for now you’re stuck with OCaml for user functions. There are worse languages.

Wouldn’t it be better if you apply the result of the user function to the transaction log iso the arguments?

Well, we’ve been thinking about that a lot before we started with user functions. The alternative is that we record and log the effect of the user function so that we can always replay that effect later, even when the code is no longer available. It’s an intriguing alternative, but it’s not a clear improvement. It all depends on the size of the arguments versus the size of the effect.

Some user functions have a small argument set and a big effect, while for other user functions it’s the other way around.

Closing words

Technically, it’s not too difficult to hook in your own functionality into Arakoon. Just make sure the thing you want to hook in does not have major flaws.

have fun,

Romain.

Caulking your distributed algorithm implementation

Posted: October 25, 2012 Filed under: algorithms, OCaml | Tags: arakoon, distributed systems, graphviz, OCaml 10 CommentsMe: Ok, all unit tests succeed.

All system tests succeed.

All acceptance tests succeed.

Release!

(some time later)

NN: We have a problem with your distributed database.

Me: Ok, what’s the matter?

NN: Once in a while the cluster seems to get stuck.

Me: Stuck how?

NN: It seems to be unable to elect a master.

Me: What did you do to get there?

NN: We don’t know, but we have the logs. Here they are. Happy hunting.

Me: $#%#&*!

(some time later)

Me: Aha, that’s what went wrong!

Introduction

The Arakoon team has spent a big part of the last two years on variations of this theme. Some of the problems turned out to configuration problems (sometimes even of other clusters), but there definitely were situations where the issue was a caused by an implementation error. As it turns out, they all have the same cause: not all possible scenarios were covered. So, is it possible to escape this vicious circle?

We already learned some things (see this previous blog post), so we use asynchronuous message passing and removed all IO from our implementation. So what’s left?

Well we have a set of distributed objects with well defined states and a set of messages that can be exchanged between them. Can’t we just generate all possible states of the distributed system and all possible messages and check how the relationships between them? This is non-trivial as the number of possible states is infinite and so is the number of possible messages. On the other hand, most state combinations are bad and inconsistent, and most messages are irrelevant… There might be something we can do.

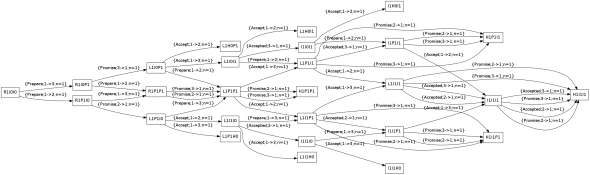

Growing a state space-ish diagram

What if we start from a consistent starting state for all objects (let’s call this the system state), generate all relevant messages that can be exchanged from that state and apply them in all possible orders. The system states that can be reached in this way from the starting state should all be consistent. If we find a state that’s not consistent, we stop. For consistent system states, we can iterate. What about inconsistent states? Well, clearly this means our algorithm is capable of doing bad things. We should check the scenario that produced this and fix it, and iterate again. Is this doable? Well, maybe… and what about simulating dropped messages?

Humble beginnings

Let’s start small. What about growing a Basic Paxos implementation for a system of three nodes? Modelling the messages should not be too difficult:

type 'v kind =

| Prepare

| Promise of 'v option

| Accept of 'v

| Accepted

type 'v message = {n:n; s:id; t:id; k:'v kind}

Each message is associated with a paxos round n, has a source s and a target t and has semantics described by its kind k. Finally there’s some value type (‘v) for the things the system should try to find consensus on. (You can find the code on Github)

Modelling the agents is a bit more work:

type 'v agent_state =

| SHalted

| SIdle

| SRequest of ('v proposal)

| SPromised of 'v option

| SLead of ('v * int) (* value, outstanding acks *)

type 'v agent = {

id : id;

pop : id list;

_n : n;

state : 'v agent_state;

store : (n * 'v) list;

}

An agent has an id, knows the other agents (pop), has a store and a current paxos round n. The interesting part is the inner state representing the role it’s playing. It can be halted or idle, requesting to become a leader for a proposal or leading an update.

Now we have messages and agents, we can model the state of the system.

type 'v network = 'v message list

type 'v agents = 'v agent list

type 'v state = { net: 'v network; ags: 'v agents}

The system state is merely a collection of agents (ags) and a network (net) representing the messages that can be delivered to the agents.

How can the state of the system change? First of all, the delivery of a message most likely will have an impact. We might add other moves later.

type 'v move = | DeliverMsg of 'v message

Generating all moves is now easy: for every message in the network, there is a move that delivers it, and since we want to stop in bad states, we don’t generate messages there:

let generate_moves state =

if is_bad state

then []

else

let deliver = List.map (fun x -> DeliverMsg x) state.net in

...

How about executing a move? There is some administration to do there.

We have a move to execute, a current state, a path that was followed to arrive at that state, and a set of observed states. If the move is the delivery of a message, we find the target agent and let him handle the message. This will change the agent’s state and produce some messages (extra).

let execute_move move state path observed =

let deliver m ag =

let agent = find_agent ag m.t in

let agent', extra = A.handle_message agent m in

let ag' = replace_agent agent' ag in

ag', extra

in

let state' =

match move with

| DeliverMsg m ->

let net' = remove m state.net in

let ags',extra = deliver m state.ags in

{ net = net' @ extra; ags = ags'}

Executing a move will cause a new system state. We will record observing the transition from the old state to the new by this move, and create the new path.

let transition = (state_label state, move, state_label state') in

TSet.add transition observed in

state' , (move,state) :: path , observed'

The whole simulator is a few functions away:

let rec check_all level state path observed =

if not (is_consistent state) then raise (Bad (path,state));

if level = limit

then observed

else

let rec loop observed = function

| [] -> observed

| move :: rest ->

let state', path', observed' = execute_move move state path observed in

let observed'' = check_all (level + 1) state' path' observed' in

loop observed'' rest

in

let moves = generate_moves state in

loop observed moves

let run state0 = check_all 0 state0 [] TSet.empty

Basically we start from an initial state, go down the tree of possible moves, execute all these and accumulate observed transitions.

Generating a diagram is trivial with Graphviz. Just iterate over the observed transitions. (not shown here, see on Github for details )

The simulation

We create 3 agents, let the first one start on a value, run our simulator from the starting state, and dottify the observed transitions.

let main () =

let ids = List.map id [1;2;3] in

let a1,a1_out = start (make_agent (Id 1) ids) "x" in

let a2,a2_out = (make_agent (Id 2) ids),[] in

let a3,a3_out = (make_agent (Id 3) ids),[] in

let world = [a1;a2;a3] in

let state0 = {net = a1_out @ a2_out @ a3_out; ags = world} in

try

let observed = M.run state0 in

M.dottify state0 observed

with (M.Bad (path, state)) ->

Printf.eprintf "bad path:\n";

M.dump_path path;

Printf.eprintf "%s\n" (state_label state)

Mark0

Let’s try this on a brain-dead simple implementation of an agent.

One that goes to the halted state as soon as it receives a message, while sending out no message at all.

module Mark0 = (struct let handle_message agent message = halt agent, [] end : ALGO)

What do we see here? First, there is a labelling scheme for states: R1I0I0 means the first agent has n=1 and is in a Requesting state, while the second and third agents ar in Idle state with n=0.

After the delivery of the {Prepare;1->2;n=1} message, a prepare from agent 1 to agent 2, the second agent halts. Likewise for the other prepare message. This looks ok, so let’s move on.

Mark1

Let’s build an agent implementation that covers the happy path.

module Mark1 = (struct

let prepare_when_idle source n agent=

let an = agent._n in

if n > an

then

let pv = None in

let agent' = {agent with state = SPromised pv; _n = n;} in

let msg = {n = n;s = agent.id;t = source; k = Promise pv } in

let out = [msg] in

agent', out

else

halt agent,[]

let promise_for_request (source:id) (mn:n) vo (proposal:'v proposal) agent =

let pv,pballot = proposal in

if mn = agent._n

then

let pballot' = Ballot.register_vote pballot source vo in

if Ballot.have_majority pballot'

then

let value = Ballot.pick_value pballot' in

let outstanding_acks = Ballot.quorum pballot' -1 in

let me = agent.id in

let targets = others agent in

let make_msg t = {n = mn; s = me; t ; k = Accept value} in

let broadcast = List.map make_msg targets in

let agent' = { agent with

store = (mn,value) :: agent.store;

state = SLead (value, outstanding_acks);

}

in

agent', broadcast

else

agent, []

else

halt agent, []

let handle_accept m v agent =

match agent.state with

| SPromised vo when agent._n = m.n ->

let agent' = {agent with state = SIdle; store = (m.n, v) :: agent.store;} in

let out = [{n = m.n;s = agent.id;t = m.s;k = Accepted}] in

agent', out

| _ -> halt agent, []

let handle_accepted m agent =

match agent.state with

| SLead (v,out) when agent._n = m.n ->

let out' = out -1 in

let state' = if out' = 0 then SIdle else SLead(v,out') in

{agent with state = state'},[]

| _ -> halt agent, []

let handle_message agent m =

match m.k with

| Prepare when agent.state = SIdle -> prepare_when_idle m.s m.n agent

| Promise vo ->

begin

match agent.state with

| SRequest p -> promise_for_request m.s m.n vo p agent

| _ -> halt agent, []

end

| Accept v -> handle_accept m v agent

| Accepted -> handle_accepted m agent

| _ -> halt agent,[]

end : ALGO)

What does this do? (click on it to see the full picture)

The good news is that there are quite a number of paths from the initial state I0I0I0 that reach our happy state I1I1I1, but there are also a lot of scenarios that end up in bad states.

Let’s look at one in detail.

R1I0I0:{Prepare;1->3;n=1} --->

R1I0P1:{Promise;3->1;n=1} --->

L1I0P1:{Accept; 1->2;n=1} --->

L1H0P1

What happened here? A Prepare message goes from agent 1 to agent 3. That agent sends a Promise back.

This causes agent 1 to become a leader and broadcast Accept messages. One of these reaches agent 1, which is clueless as it did not receive a Prepare message first. Agent 1 therefore halts.

The diagram allows us to understand scenarios that lead to bad states, and to modify the algorithm accordingly. This process of finding holes in your algorithm,patching them and iterating is something which I call caulking in absence of a better word. In this particular case, an agent that is Idle can receive an Accept for the next n and should be able to move to the Idle state at the next n.

What about dropped messages?

Earlier, I did not answer the question about the simulation of dropped messages. The above scenario should make clear that we are actually, in luck. There is no difference between that scenario and a scenario where a Prepare from agent 1 and agent 2 was dropped. In general, there is no difference between dropping a message and delaying it until it is no longer relevant. This means there is no need for us to simulate them at all!

Mark2

Let’s caulk Mark1. Looking at the diagram, not a lot of things need to be fixed. Here’s a list of messages that go awry.

- Accept;n when agent is Idle at pred n

- Accepted;n when agent is already Idle at n

- Promise;n when agent is already Leading at n

- Promise;n when agent is already Idle at n

Ok, adapting the code is easy:

module Mark2 = (struct

let prepare_when_idle source n agent=

let an = agent._n in

if n > an

then

let pv = None in

let agent' = {agent with state = SPromised pv; _n = n;} in

let msg = {n = n;s = agent.id;t = source; k = Promise pv } in

let out = [msg] in

agent', out

else

halt agent,[]

let promise_for_request (source:id) (mn:n) vo (proposal:'v proposal) agent =

let pv,pballot = proposal in

if mn = agent._n

then

let pballot' = Ballot.register_vote pballot source vo in

if Ballot.have_majority pballot'

then

let value = Ballot.pick_value pballot' in

let outstanding_acks = Ballot.quorum pballot' -1 in

let me = agent.id in

let targets = others agent in

let make_msg t = {n = mn; s = me; t ; k = Accept value} in

let broadcast = List.map make_msg targets in

let agent' = { agent with

store = (mn,value) :: agent.store;

state = SLead (value, outstanding_acks);

}

in

agent', broadcast

else

agent, []

else

halt agent, []

let handle_accept m v agent =

let _accept m =

let agent' = {agent with state = SIdle; store = (m.n, v) :: agent.store;} in

let out = [{n = m.n;s = agent.id;t = m.s;k = Accepted}] in

agent', out

in

match agent.state with

| SPromised vo when agent._n = m.n -> _accept m

| SIdle when (next agent._n) = m.n -> _accept m

| _ -> halt agent, []

let handle_accepted m agent =

match agent.state with

| SLead (v,out) when agent._n = m.n ->

let out' = out -1 in

let state' = if out' = 0 then SIdle else SLead(v,out') in

{agent with state = state'},[]

| SIdle when agent._n = m.n -> agent,[]

| _ -> halt agent, []

let handle_message agent m =

match m.k with

| Prepare when agent.state = SIdle -> prepare_when_idle m.s m.n agent

| Promise vo ->

begin

match agent.state with

| SRequest p -> promise_for_request m.s m.n vo p agent

| SLead(v,out) when agent._n = m.n -> agent, []

| SIdle when agent._n = m.n -> agent, []

| _ -> halt agent, []

end

| Accept v -> handle_accept m v agent

| Accepted -> handle_accepted m agent

| _ -> halt agent,[]

end : ALGO)

Isn’t it nice that fixing the holes in our algorithm actually makes the diagram smaller? Since we don’t end up in bad states anymore, there are way less transitions. It’s also aesthetically pleasing graphviz shows all arrows from left to right, meaning there are no transitions that actually increase the distance between the current state and the state we’re aiming for.

What about agents that are wiped clean?

This kind of calamity is not too difficult to simulate. Basically it’s a move that puts the agent back in its starting state. Let’s add the possibility that one of the agents is wiped.

let generate_moves state =

if is_bad state

then []

else

let deliver = List.map (fun x -> DeliverMsg x) state.net in

let id3 = Id 3 in

let agent = find_agent state.ags id3 in

let wipe =

if is_halted agent

then []

else [Wipe id3]

in

deliver @ wipe

Let’s try that…

./paxos.byte > mark3.dot bad path: 1 ---(1: Prepare) ---> 3 3 ---(1: Promise) ---> 1 1 ---(1: Accept) ---> 2 1 ---(1: Accept) ---> 3 Wipe 3 L1I0I0

Auch. There actually is something wrong here. As it turns out, there is a bug in the Mark2 module.

It’s this fragment that’s wrong:

let handle_accept m v agent =

let _accept m =

let agent' = {agent with state = SIdle; store = (m.n, v) :: agent.store;} in

let out = [{n = m.n;s = agent.id;t = m.s;k = Accepted}] in

agent', out

in

match agent.state with

| SPromised vo when agent._n = m.n -> _accept m

| SIdle when (next agent._n) = m.n -> _accept m

| _ -> halt agent, []

Handling an Accept when the agent is in the Idle state should also set the n correctly (the one of the message). Let’s fix that and try again.

Here it is:

Again, we’re confronted with lots of bad states but we know how to fix that. What are the scenarios that bring us in bad states? As it turns out, these are all caused by old Prepare messages. Adding that and generating the diagram again yields:

Indeed, wiping an agent moves to a state somewhere to the left of the current state, which matches the idea of being further away from our goal.

Are we there yet?

So far, we only addressed cases where there is only 1 agent in a requesting state. So what would happen if there are two agents requesting something at the same time?

Happy caulking!

Closing Remarks

Arriving at a correct implementation of an algorithm is difficult, but even more so in the case of distributed systems. This blog post shows a strategy you can apply in caulking your own implementations. As stated before, making your implementation pure helps you a lot.

Have fun,

Romain.

Tracking Asynchronous IO Using Type Systems

Posted: April 2, 2012 Filed under: OCaml, Programming, Research | Tags: async, gevent, lwt, Monads, OCaml 5 CommentsSome time ago I gave a short presentation to some colleagues of mine about the

Python gevent library, and the low-level libraries it uses to perform its job

(which mainly boils down to handle asynchronous IO and managing the microthreads

waiting for these asynchronous actions to complete, using libev or libevent as

a wrapper around select/poll/epoll and the greenlet hack to support lightweight

threads in Python).

The gevent library contains a module which monkey-patches several modules in the

Python standard library to change their synchronous nature into an asynchronous

implementation running on top of the gevent mainloop, including socket and

thread. This is required to work around one of the major pitfalls whenever

trying to use async IO in a runtime like Python: any existing code/library/…

which performs (standard) blocking calls somehow, will block the

(single-threaded, from an OS perspective) mainloop, and as such inhibit

execution of any runnable microthreads at the time (this is something where e.g.

node.js has an edge over Python when developing highly concurrent servers. Do

not fear, I won’t get into the topic of whether or not it’s a good idea to write

highly concurrent and scalable services in Python or Javascript in this post).

Next to providing a mechanism to handle concurrent IO, the use of greenlets to

manage threads of execution also introduces another useful property of gevent:

instead of threads backed by OS threads, executed in parallel on an SMP system,

using preemptive multitasking, the lightweight threads are scheduled in

userspace by the library itself, in a cooperative manner: the places at which a

thread of execution yields execution (also known as ‘switch’ in gevent) is

explicity defined inside the code (whether it’s yours or in some library). As

such one can make assumptions (or rather, assertions) about some code using

some mutable data shared across execution threads which can be thread-safe in

the cooperative settings, whilst it would be unsafe in a preempted scenario.

The latter raised an interesting question from a colleague: is there a way to

assert some code will not yield execution, i.e. some section will always be

executed as an atomic block? This is, obviously, an important consideration

when relying on this property when writing code without locks which would

become incorrect if the tread could be switched out!

I answered (maybe to their surprise) this is certainly possible and standard

practice in some languages, yet as far as I know not in Python (or, at least,

not very elegant). I didn’t get into this any further at the time, yet here’s a

post in which we will devise such tracking system.

It’s based on several concepts from the world of Functional Programming, yet

don’t fear: you don’t need any knowledge about any FP concepts at all. Read on

and enjoy the ride!

Asynchronous Programming

As you most likely heard, there are 2 ways data can be provided to something

making a request: synchronously, or asynchronously. In the prior system, the

requesting entity will wait (block) until it receives a response, then

continue execution. When using the asynchronous pattern, some code will issue a

request, then tell the system what to do when the request completed and a

result is available, and then continues working on something else, or yielding

execution to some other execution thread (please make sure to make a strong

distinction between thread of execution and system thread: there might be

some relation between these in some programming patterns, yet conceptually

they’re different beasts!).

Note there’s a major difference between execution mechanisms (sync vs. async)

and APIs: whilst some APIs explicitly expose their asynchronous execution

nature by using callbacks (think node.js, Twisted‘s Deferred type, which is a

sort of callback-on-steroids,…), others might look as if every call is a

blocking procedure call, yet internally everything is dispatched using some

asynchronous mechanism (think CPS, ‘Future‘-style APIs which get compiled

into something using CPS, or (you guessed it) systems like gevent or the IO

manager and microthreads as found in GHC‘s Haskell runtime).

The differences (or should I say, similarities?) of these styles deserve a

complete post on their own though!

Enter Types

Whenever we want to track something at a code level (which is basically, at

compile time), all we can use is what is known at this compile time: types. We

can’t rely on any values (since there are no actual values available! Go

figure: since much use of asynchronous programming is found when handling IO,

it’d be funny to know what we’ll receive on a socket at runtime while compiling

the program)!

We invent a name for the type which will be used to tag all values which we

received thanks to some sort of asynchronous call (which might introduces a

yield point): we’ll call it async. Now this is rather useless: async

what?

Those of you who toyed with generics before in Java, C#, Scala or anything

alike might think the answer is rather trivial: we create a generic type to

tag the actual type of the value we retrieved using an async call! These

generic types are also known as higher kinded type in FP, yet you shouldn’t

care too much about this for the rest of this discourse.

So, we now got our type, written as ‘a async (using OCaml notation). This is

similar to Async<A> in Java and C# (as far as I remember). For those new to

generics: think of a list of items. One could have a list of strings, one of

ints, myobjects or anything else. The type of these lists will then be string

list, int list and myobject list. We can write functions which can act on

any kind of lists (e.g. check whether the list is empty: it doesn’t matter

what type of values the list contains!). As such the list type is defined as a

more generic type which takes a type parameter: ‘a list. It’s

parametrised over type ‘a.

So, char async is a char we obtained using using some async action, and

a value of type string async is a string we got through some async call (it’s

an action which will yield a string value). Do note string async and string

are completely different types, as such it’s not (and should not be!) possible

to pass a value of type string async to a function which takes a string

value as its input. You could consider a value of type string to be a real

string value, a sequence of characters, whilst a value of type string async

is the representation of "a string value we’ll retrieve using some async call",

which is not the value itself.

Note this type comes pretty close to a Future, which is also often

parametrised (a generic class) Future<A>, which signifies "a box which will

eventually contain a value of type A".

Primitives

For our system to work, we’ll need a couple of primitive, magic operations,

provided by the system, which are the basic building blocks of everything

above. These are the operations which implement the most low-level async

procedures which can only be provided by the runtime system. Everything else

can be constructed on top of these by combining functions and actions and as

such building our applications, composing entities into larger entities.

We will only introduce 3 of these primitives, which should be sufficient for

the mechanism outlined in this post to be clear. In a real, production library

or runtime system one would need a couple of more of these primitive actions to

be able to do something interesting, of course!

Of these 3 actions, 2 work on file descriptors: one writes a given string to a

given file descriptor and doesn’t return any result (we assume the data will

always be written at once, and this will never fail, unlike the write(2)

system call, obviously), the other reads a string of requested length from a

given file descriptor (the same principle with regard to success and failure

applies).

The third action allows a thread to sleep for a given amount of time (say,

seconds, although that obviously doesn’t make any difference). The result of

this action contains the number of seconds the thread actually did sleep.

Here are the prototypes. Note unit is a type with only a single value, (),

which is used when some other languages use void (it’s not exactly the same

thing, but for now this comparison should hold):

write : file_descr -> string -> unit async read : file_descr -> int -> string async sleep : int -> int async

Keeping the Genie in the Bottle

Ok, first steps are done: we got a type to wrap values which were obtained

using an async action, and we got a couple of actions defined. Next step: doing

something useful with these operations!

Let’s start with a very simple exercise: writing an action which reads a single

character from standard input (a value named stdin of type file_descr) and

writes it to standard output (a value named stdout of type file_descr).

First, we’ll create 2 utility functions to handle standard input and output, by

using partial application (if you don’t know what this is, consider the

following example: given the function mul :: int -> int -> int which

multiplies 2 integers, we can use partial application and call mul with a

single argument, e.g. 10, which results in a function of type int -> int.

Whenever passing an argument to this function, the result will be 10 times this

argument):

let read_stdin = read stdin let write_stdout = write stdout

The types:

read_stdin : int -> string async write_stdout : string -> unit async

Now we could try to write our program:

let attempt () =

let char = read_stdin 1 in

let _ = write_stdout char in

()

Failure all over! First of all, the code will not type-check:

Error: This expression has type string async

but an expression was expected of type string

referring to the char argument on the line calling write_stdout.

Here’s why: write_stdout wants its first argument to be of type string

(this is the type of the second argument of write, as you know), but the

value we provide, named char, is not of type string: its type is

string async, the return type of the read_stdin (or further down read)

action!

Next to that, if the code would type-check, our initial goal would have

failed: the type of attempt would be unit -> unit, which doesn’t signify

we used some of the async primitives at all! Our action must return something

of type unit async, and there should be no way to write an action whose

return type is not ‘a async for some type ‘a!

Back to the drawing board… It looks like standard assignment and passing

values around as-is won’t work (remember I stressed it’s important to make the

distinction between a string value and something representing some string

retrieved using some async action of type string async?).

We dig into our FP toolkit once more, and grab another hammer (after the type

system we used earlier): function composition! Or, in this case, action

composition.

What we want is a function we can use to link two actions together into a

new action!

Let’s try to figure out some type signature for such function:

link : 'a async -> 'b async -> 'b async

link takes an initial action which yields something of type ‘a, a second

action which yields a ‘b, and combines these into an action which also yields

a ‘b async action.

But wait, this can’t be right either! In this setup, the second action still

has no way to retrieve and actually use the ‘a value as encapsulated by the

first action!

We need to extend our link function to unwrap whatever the first given action

yields, then pass it to the second argument as a proper value it can use,

whilst still making sure the fact there’s something asynchronous going on is

retained.

Here’s what the type of our link function should look like:

link : 'a async -> ('a -> 'b async) -> 'b async

The second argument now became a function (cool, uh, functions taking functions

as arguments? These are also called "higher-order functions" (remember

"higher-kinded types"?) and are very powerful) which takes a value of type

‘a, and results in an action of type ‘b async, which is then used as the

result of the link function.

Note how you can see, only by looking at the type signature, the actual ‘a

value can never be leaked out of some other ‘b async action, since that’s

what the second argument must return, and only this function ever gets access

to the plain ‘a value?

Do note we will not discuss how link is implemented, since this is

unrelated to this post, and depends on how the whole library is designed and

implemented!

Let’s get back to our single-character echo action: using link, we need an

initial action of type ‘a async. We got that: read_stdin 1 has type

string async. Ok, next we need a function of type ‘a -> ‘b async. We know

what ‘a is now, since we already decided to use read_stdin 1 as first

argument, so ‘a is string. We’re looking for a function of type

string -> ‘b async which writes the given string to the screen. This is easy:

write_stdout has exactly this type, using unit for ‘b!

Here goes:

let echo_one = link (read_stdin 1) write_stdout

The type of echo_one is unit async, like we want it to be!

From now on though, we won’t use the name link anymore: this is just

something I made up. A more common name for such function is bind, which

we’ll use from now on. Next to that, there’s an infix operator (an infix

operator is a function which takes 2 arguments and is placed in-between

these arguments instead of before them, like the + in 1 + 2) called >>=.

This allows us to rewrite our echo_one action like this:

let echo_one' = read_stdin 1 >>= write_stdout

Let’s make things more interesting: writing an action which reads 10 characters

from standard input, then sleeps maximum 2 seconds, then writes these

characters to some given file descriptor:

let sleepy_writer out =

read_stdin 10 >>= fun data ->

sleep 2 >>= fun _ ->

write out data

You might notice the use of indentation and anonymous functions, and we ignore

the result of the sleep action (we use _ as its binding name), but the

code should be easy to understand.

If this isn’t clear, here’s how to read the above snippet: we define a function

called sleepy_writer which takes a single argument called out. Upon

invocation, the function will result in 10 chars to be read from stdin.

read_stdin 10 is an action which, upon completion, will yield a string. We

bind a function which takes this string value (which we bind to the name

data in an anonymous function) and returns another action: everything starting

with sleep up until the very end of the function body. So, once read_stdin

10 has completed, we continue with this next action, which will make the

current thread of execution sleep for 2 seconds. Once again, we bind this to a

function which takes the value which the sleep 2 action yields and ignores

this (by binding it to the name _), then results in one last action which will

be executed as well, and will write the data value (which is at that moment in

scope!) to the given out file descriptor. The result of the sleepy_writer

action will be the result of the write action.

Try to figure out the type of sleepy_writer. Got it?

sleepy_writer : file_descr -> unit async

Notice we didn’t get rid of the async marker!

Finally, an action which keeps reading chunks of data of given chunk size from

a given file descriptor, then writes it to another given file descriptor:

let rec copy_stream chunk_size in_fd out_fd =

read in_fd chunk_size >>= fun data ->

write out_fd data >>= fun () ->

copy_stream chunk_size in_fd out_fd

Even though copy_stream is infinitely-recursive, its type can be calculated:

copy_stream : int -> file_descr -> file_descr -> 'a async

Yet again, the async marker sticks.

Do note, in real-world code, one should not use a top-level rec definition

but define some loop action in the body etc.

Return, But Not As You Know It

One last step in our journey is required. Up until now all actions we created

by combining other actions using bind had the result value of the last

action in this chain as their result, whilst in some actions we want to

calculate such result and return value. Due to the constraints we wanted to

achieve (and as imposed by the only function we can use to actually use

actions, bind) we can’t just use plain values, they need to be wrapped in the

async container as well. So here’s what we need: something which turns an

‘a into an ‘a async:

whatever_it_is_called : 'a -> 'a async

For some reasons, this function is mostly called return. Don’t be mistaken,

this is completely unrelated to the return statement as found in

C/Java/C#/Python/PHP/… and is no related to end the execution of a procedure

and signal some result value or anything alike. It’s a normal function to put

a value in an async box, even though this value itself was not retrieved

using some async action as-is:

return : 'a -> 'a async

Given this, we can write some more interesting actions. As a first example,

let’s write an action which reads a single line from a given file descriptor by

reading characters one-by-one until it finds an ‘n’ character, then yields

the line it read (without the newline):

let read_line fd =

let rec loop acc =

read fd 1 >>= fun char ->

if char = "\n"

then return acc

else loop (acc ^ char)

in

loop ""

If you’re not versed into FP yet, this might be somewhat hard to read and

understand at first. Take a second look and follow the execution flow manually,

it’ll become clear. It might be useful to know the ^ operator is used to

concatenate strings:

(^) : string -> string -> string

Did you try to figure out the type of read_line? It’s as intended:

read_line : file_descr -> string async

One more example: since the sleep action might return even before the

requested number of seconds has passed (don’t ask me why, I just made that up),

we want to write an action which sleeps at least the given number of seconds,

and as little more as possible (otherwise we could sleep eternally. Nice

try!). We don’t care how long we slept in the end (which is a rather stupid

requirement: a serious API would return this value, and a caller is free to

ignore this).

Here we go:

let sleep_min sec =

let rec loop left =

sleep left >>= fun slept ->

if left < slept

then return ()

else loop (left - slept)

in

loop sec

The type of sleep_min? int -> unit async.

Conclusion

Here we are! Using the ‘a async type, bind and return, we have a system

which allows us to combine and use asynchronous actions, whilst being sure we

can never forget something async is going on under the hood, no matter how

complex the actions themselves become. If we don’t see something of the

‘a async type, we can be certain nothing is using the async primitives

underneath.

Notice how we were able to implement something which gives what we wanted from

the beginning, without any specific support in the language we’re using:

only fairly standard type system requirements, and functions, as available in

several languages, including OCaml, Haskell and several others (although in

large languages without first-class functions etc. syntax might become an

issue, thinking of Java and alike).

Thanks to the use of types, the compiler can be very helpful during development

to reduce the number of potential runtime issues. Even though a system like the

above can be implemented in dynamic-typed languages like Python or Ruby, having

compile-time type checking offers a lot of safety!

Note this has been a very basic introduction, so now comes…

Going From Here

Once you reached this point, you might want to get to know more about the

mechanics behind the system outlined above. As some might have heard, bind

and return are often used in the presence of monads, and indeed, one might

think our ‘a async type is monadic (it might be, but not necessarily: the

monad laws won’t be fulfilled in the presence of real IO). Overall monads

provide a way to track "things with a tag which should always keep this tag".

The above is a very informal definition and introduction, but the interested

reader might refer to one of the many monad tutorials available on the internet

(all of varying quality and usefulness).

Next to this, reading up on functors (not the OCaml kind, but things with the

function fmap :: (a -> b) -> ‘a f -> ‘b f) could be useful as well (our

‘a async type is a functor, like any other monad:

let fmap f a = a >>= fun v -> return (f v)).

Some links:

- Monads on the Haskell wiki

- Chapter 14 in Real World Haskell

- You Could Have Invented Monads! (And Maybe You Already Have)

- The famous (and useful!) Typeclassopedia

Tracing block device write request sizes in Linux using SystemTap

Posted: March 6, 2012 Filed under: Linux, Programming, Research | Tags: kernel, linux, system, systemtap 3 CommentsRecently some people started to wonder how Arakoon, our distributed key-value store, handles the drive on which data is stored. To be more precise, this boils down to how Tokyo Cabinet (which we currently use as database implementation) submits write requests to the kernel, and how the kernel processes these later on, after translating the filesystem-level requests into write operations in the block layer.

This is considered important to know due to the usage of SSD drives (think wear leveling and other issues coming with these devices).

Tracing how the application writes data to a file can be achieved using the strace tool, looking for write(2) and pwrite(2) calls (although this is not necessarily sufficient: the application might be writing to a file using mmap(2) memory mappings).

On most loads, this won’t correspond to how block requests are sent to the actual hardware, though: writes can be buffered, reordered, etc. What we want is a way to gather information of write requests in the kernel block layer.

In Linux, one can use SystemTap (which is somewhat like DTrace on Solaris) to hook into the kernel at runtime and collect statistics, or lots of other information. One writes a script in which one hooks into trace points or function calls, then processes the incoming information.

I won’t go into the installation of SystemTap, since this will depend on the distribution you’re running. Do note you might not need root access to execute the scripts (so you shouldn’t either!): on my Fedora 16 system, all I had to do was putting my user in the stapsys, stapusr and stapdev groups (although I’m not sure I really need to be in all of those…). You’ll need debugging symbol files of your running kernel available, among other things (in my experience attempting to run a script on a system where some prerequisites are missing will result in the stap tool telling you what you need).

Writing SystemTap scripts sometimes requires some knowledge of kernel internals, to know where to hook in. Since I wanted to gather some statistics about block-layer calls, I knew I had to look for calls in the ‘bio’ subsystem, short for “Block IO”. Luckily SystemTap comes with some predefined combinations of hooks, called ‘tapsets’. These are stored (on my system at least) in /usr/share/systemtap/tapset. One of these files is called ioblock.stp, which sounds interesting. Since I was too lazy to look for documentation, I opened the file in a pager and read through it. The ioblock.request tap looked interesting, which provides access to a size value. After reading some example SystemTap scripts, here’s what I came up with:

global writes

probe ioblock.request {

if(bio_rw_num(rw) == BIO_WRITE)

writes[devname] <<< size

}

probe end {

printf("\n")

foreach([devname] in writes-) {

printf("Device: %s\n", devname)

println(@hist_log(writes[devname]))

}

}

Running this using the stap tool, and closing it after a while (using CTRL-C) gives the following output: